08. Quiz: TensorFlow ReLU

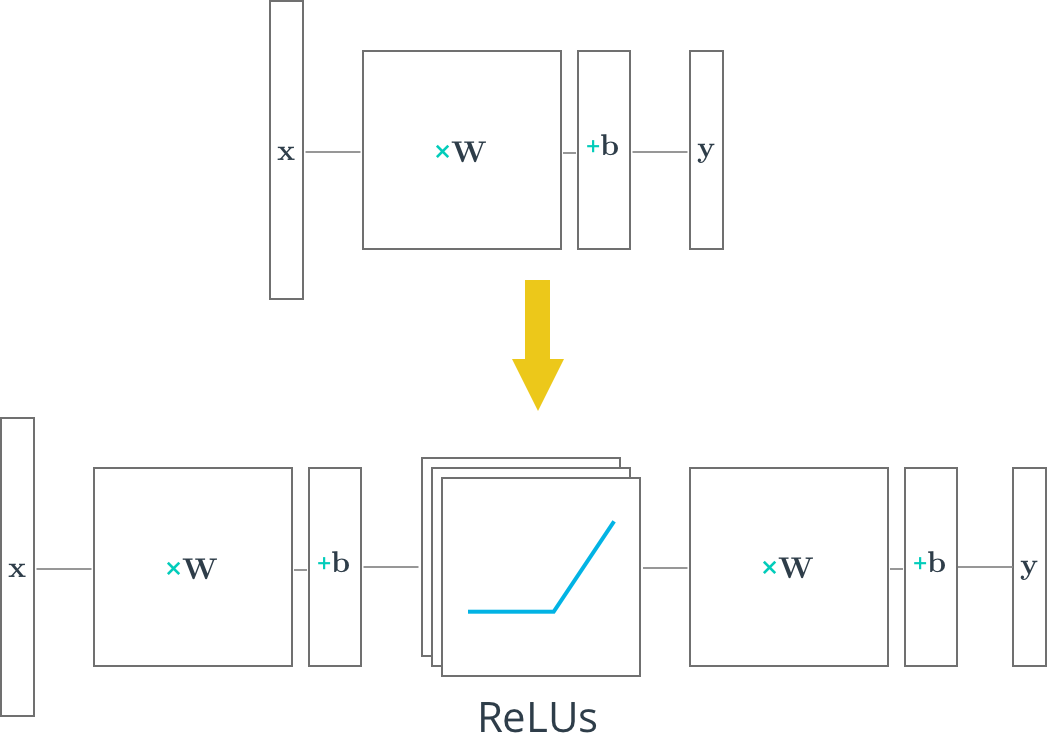

A rectified linear unit (ReLU) is a type of activation function defined as `f(x) = max(0, x)`. The function returns 0 if `x` is negative; otherwise it returns `x`. TensorFlow provides the ReLU function as `tf.nn.relu()`, as shown below.

    # Hidden Layer with ReLU activation function
    hidden_layer = tf.add(tf.matmul(features, hidden_weights), hidden_biases)
    hidden_layer = tf.nn.relu(hidden_layer)
    output = tf.add(tf.matmul(hidden_layer, output_weights), output_biases)

The code above applies `tf.nn.relu()` to `hidden_layer`, zeroing out any negative values in the layer's output so that each hidden unit acts like an on/off switch. Adding further layers, like the `output` layer, after an activation function turns the model into a nonlinear function. This nonlinearity allows the network to solve more complex problems.
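To make the effect of the activation concrete, here is a minimal sketch of the same hidden-layer/output-layer computation in plain Python, without TensorFlow. The helper names `relu`, `dense`, and `forward`, the `use_relu` flag, and all weight and bias values are assumptions chosen purely for illustration; they are not part of the lesson's workspace.

```python
def relu(values):
    """Apply f(x) = max(0, x) to every element of a list."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Compute inputs @ weights + biases for a single sample.

    inputs:  list of length n_in
    weights: nested list with shape n_in x n_out
    biases:  list of length n_out
    """
    return [
        sum(inputs[i] * weights[i][j] for i in range(len(inputs))) + biases[j]
        for j in range(len(biases))
    ]


def forward(features, use_relu=True):
    # Hypothetical parameters, picked so the result is easy to check by hand.
    hidden_weights = [[1.0, -1.0], [-1.0, 1.0]]
    hidden_biases = [0.0, 0.0]
    output_weights = [[1.0], [1.0]]
    output_biases = [0.0]

    # Hidden layer, optionally followed by the ReLU activation.
    hidden_layer = dense(features, hidden_weights, hidden_biases)
    if use_relu:
        hidden_layer = relu(hidden_layer)

    # Output layer stacked on top of the hidden layer.
    return dense(hidden_layer, output_weights, output_biases)


print(relu([-2.0, 0.0, 3.5]))
print(forward([3.0, 1.0]), forward([1.0, 3.0]), forward([4.0, 4.0]))
print(forward([3.0, 1.0], use_relu=False))
```

With these particular weights, the ReLU version computes the absolute difference of the two inputs, so the outputs for `[3.0, 1.0]` and `[1.0, 3.0]` do not add up to the output for their sum `[4.0, 4.0]`, which a linear model would require. With `use_relu=False` the two layers collapse into a single linear map, which for these weights always returns zero.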

Quiz

In this quiz, you'll use TensorFlow's ReLU function to turn the linear model below into a nonlinear model.
